Photo by Alina Grubnyak on Unsplash

Kubernetes Services: NodePort, LoadBalancer

load balancing, routing, and discovery capabilities

Introduction:

In the world of Kubernetes, where containerized applications thrive, ensuring seamless access to these applications is crucial. This is where Kubernetes services come into play. Services bridge users and applications, providing load balancing, routing, and discovery capabilities. In this blog, we will explore the importance of Kubernetes services and how they enable users to access applications seamlessly, regardless of changes in pod IP addresses.

Problem Understanding:

As we know, deployments in k8s ultimately create pods with the help of a replica set. Let's say we have 3 replicas of a pod and 10,000 concurrent users of our application. If every request goes to one pod, that pod will be overloaded. If the pod goes down, the replica set starts creating a replacement, but the IP address of the new pod is different from that of the pod that went down, so users who were pointed at the old address can no longer reach the application. In real life, users hardly ever know the IP address of a pod; routing traffic to the different instances is handled by load balancers. So the ultimate need for services in k8s is to access the pods seamlessly: even when one of the pods goes down and a new pod with a different IP address takes its place, the user should still be able to access the application running in the new pod.

Solution:

When we create a deployment in the K8s cluster, we also create a service for that deployment; Kubernetes does not create one automatically. A naming convention such as deployment_name-namespace_name-svc can be used, for example minio-minio-dev-svc (service names must be valid DNS labels, so hyphens are used rather than underscores). The application running inside the containers can then be accessed via the service's IP address, and the service acts as a load balancer and routes the traffic accordingly. Now let's clear up a possible doubt. A service is a middleman between the user and the application: a single point of contact from which all requests are distributed to the respective pods. But when a new pod comes up, it has a different IP address from the one that went down, so the service would seem to face the same problem of sending requests to a stale IP address. This problem is solved by service discovery.
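The middleman role described above can be sketched as a toy model. This is only an illustration: the class `ServiceSketch`, the service name, and the pod IPs are hypothetical, and round-robin is a simplification that stands in for whatever balancing the cluster's proxy actually performs.

```python
from itertools import count


class ServiceSketch:
    """Toy stand-in for a Service: one stable entry point, many changing backends."""

    def __init__(self, name, pod_ips):
        self.name = name
        self.pod_ips = list(pod_ips)  # current backend pod IPs
        self._counter = count()       # counts requests seen so far

    def replace_pod(self, old_ip, new_ip):
        """Swap a dead pod's IP for its replacement; callers keep using the service."""
        self.pod_ips[self.pod_ips.index(old_ip)] = new_ip

    def route(self):
        """Pick the backend for the next request in round-robin order."""
        return self.pod_ips[next(self._counter) % len(self.pod_ips)]


svc = ServiceSketch("minio-svc", ["10.0.0.1", "10.0.0.2", "10.0.0.3"])
first_three = [svc.route() for _ in range(3)]

# Pod 10.0.0.2 goes down and its replacement gets a new IP.
svc.replace_pod("10.0.0.2", "10.0.0.9")
next_three = [svc.route() for _ in range(3)]

print(first_three)
print(next_three)
```

The caller only ever talks to `svc`; the change of pod IP is absorbed inside the service object, which is the property the real Service provides.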

Let's understand how service discovery works. In simple words, the service does not keep a hard-coded list of pod IP addresses. It uses labels and selectors. In the above example, we have a deployment named minio with 3 replicas, i.e., three pods are running. The deployment's pod template gives all three pods the same label, for example minio, and the service's selector matches that label. Now, regardless of a pod's IP address, the service can send requests to any pod that carries the label, including replacement pods.
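As a rough illustration of label-based discovery, the sketch below selects pods by label rather than by IP. The pod names, IPs, and the helper functions `matches` and `endpoints` are made up for this example; it models only simple equality selectors.

```python
def matches(selector, labels):
    """True when every key/value pair in the selector is present in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector, pods):
    """Return the IPs of all pods whose labels satisfy the selector."""
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


pods = [
    {"name": "minio-1", "ip": "10.0.0.1", "labels": {"app": "minio"}},
    {"name": "minio-2", "ip": "10.0.0.2", "labels": {"app": "minio", "tier": "storage"}},
    {"name": "other-1", "ip": "10.0.0.7", "labels": {"app": "other"}},
]
selector = {"app": "minio"}

before = endpoints(selector, pods)

# minio-1 is replaced by a new pod: different name and IP, same label.
pods[0] = {"name": "minio-4", "ip": "10.0.0.42", "labels": {"app": "minio"}}
after = endpoints(selector, pods)

print(before)
print(after)
```

Because the lookup is driven by the label, the replacement pod is picked up without anyone updating an IP list by hand.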

Another feature made possible by services in K8s is exposing the application to the external world, i.e., outside the cluster network. Let's say we are using uber.com to book a ride. Uber will never tell its users to log in to the cluster network to access the application; users simply go to uber.com. This is achieved by exposing the application outside of the k8s cluster, which is done using a service. We have three options when creating a service to expose an application.

ClusterIP: This is the default behavior of a service, in which the application can only be accessed from within the cluster. The benefits we get from this mode are discovery and load balancing. To access an application behind a ClusterIP service, you must be inside the cluster, for example by using ssh to log in to the cluster.

NodePort: This is a type of service that allows access to an application running within a Kubernetes cluster from outside the cluster. It exposes a port on every node in the cluster, and any traffic sent to that port is forwarded to the associated service. Users do not need access to the cluster itself to reach the application, but they do need network access to a node.

LoadBalancer: This is a type of service that provides external access to applications running within a cluster, similar to a NodePort service. However, unlike NodePort, LoadBalancer services can take advantage of cloud provider-specific load balancers to distribute traffic across multiple nodes. Let's say we are using EKS (Elastic Kubernetes Service) offered by AWS. We get an ELB (Elastic Load Balancer) address for the specific service, and we can use this public address to access the application from the external world. The component that provisions the load balancer and makes its address available is the CCM (Cloud Controller Manager).
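One way to keep the three options straight is to note that each type builds on the previous one. The sketch below is a simplified mental model under default settings, not an API: the dictionary `EXPOSURE`, the helper names, and the node IPs are all illustrative.

```python
# Simplified model: with default settings, each Service type adds one layer
# of exposure on top of the previous type.
EXPOSURE = {
    "ClusterIP": ["cluster IP"],
    "NodePort": ["cluster IP", "node port"],
    "LoadBalancer": ["cluster IP", "node port", "external load balancer"],
}


def reachable_from_outside(service_type):
    """True when the type exposes something beyond the cluster-internal IP."""
    return len(EXPOSURE[service_type]) > 1


def node_port_urls(node_ips, node_port):
    """A NodePort service answers on the same port of every node."""
    return [f"http://{ip}:{node_port}" for ip in node_ips]


for service_type in EXPOSURE:
    print(service_type, reachable_from_outside(service_type))

# Hypothetical node IPs; 30007 is the nodePort used later in this post.
urls = node_port_urls(["192.168.49.2", "192.168.49.3"], 30007)
print(urls)
```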

Demo:

Let's deploy the Python application on the k8s cluster, i.e., the minikube cluster. We are going to use this GitHub repository in which we have a Python application.

Creating Deployment:

We have a ready Docker File in this repository, which we can use to build a Docker image, and then we will deploy it as deployment on the minikube cluster.

After that, we are going to write

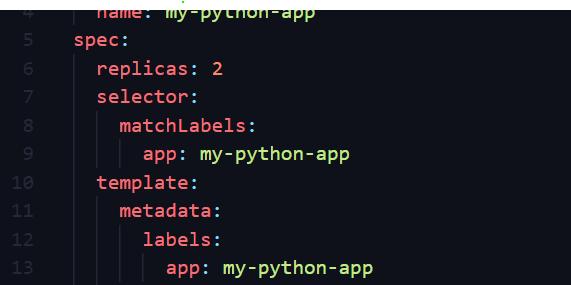

deployment.yamlfile, which will contain the following codeFROM ubuntu WORKDIR /app COPY requirements.txt /app COPY devops /app RUN apt-get update && \ apt-get install -y python3 python3-pip && \ pip install -r requirements.txt && \ cd devops ENTRYPOINT ["python3"] CMD ["manage.py", "runserver", "0.0.0.0:8000"]In this YAML file, we declare that this is of type deployment by which k8s understand this should be handled as deployment, which gives us features such as auto scaling and auto healing. Also, we declare the label to this as python-app; this label will be used by the service for discovery which we discussed in the above section.
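The manifest itself is not reproduced in the text, so here is a minimal sketch of what such a Deployment could look like, written as a Python dictionary and dumped as JSON (which `kubectl apply -f` also accepts). Every concrete value is an assumption: the image name `example/python-app:v1` is hypothetical, the replica count is borrowed from the earlier 3-replica example, and the label `app: my-python-app` is chosen only so that it matches the selector in the service.yaml shown in the NodePort section.

```python
import json

# Assumption: this label must be identical to the Service's selector.
APP_LABEL = {"app": "my-python-app"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "python-app", "labels": APP_LABEL},
    "spec": {
        "replicas": 3,  # assumption, taken from the 3-replica example above
        "selector": {"matchLabels": APP_LABEL},
        "template": {
            "metadata": {"labels": APP_LABEL},
            "spec": {
                "containers": [
                    {
                        "name": "python-app",
                        "image": "example/python-app:v1",  # hypothetical image name
                        # The Dockerfile's CMD serves on port 8000.
                        "ports": [{"containerPort": 8000}],
                    }
                ]
            },
        },
    },
}

manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

The point to notice is that the same label appears in `spec.selector.matchLabels` and in the pod template; the Deployment uses it to find its own pods, and a Service can use it to discover them.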

And that's it. We can now run the following command to create a deployment

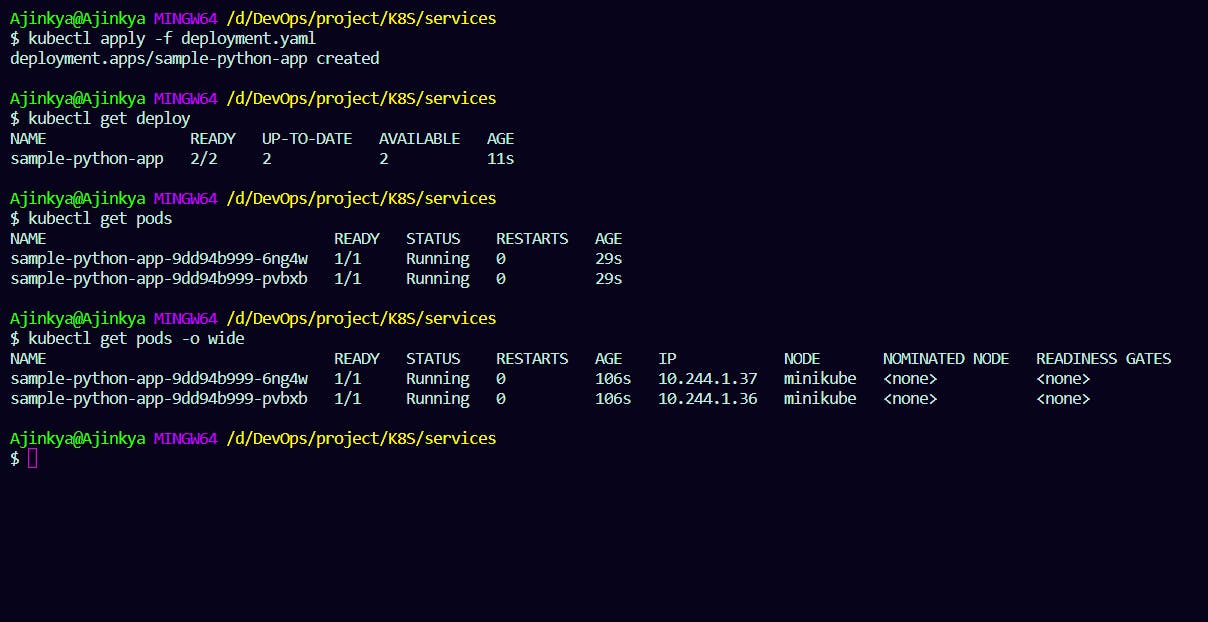

```shell
kubectl apply -f deployment.yaml
```

Output:

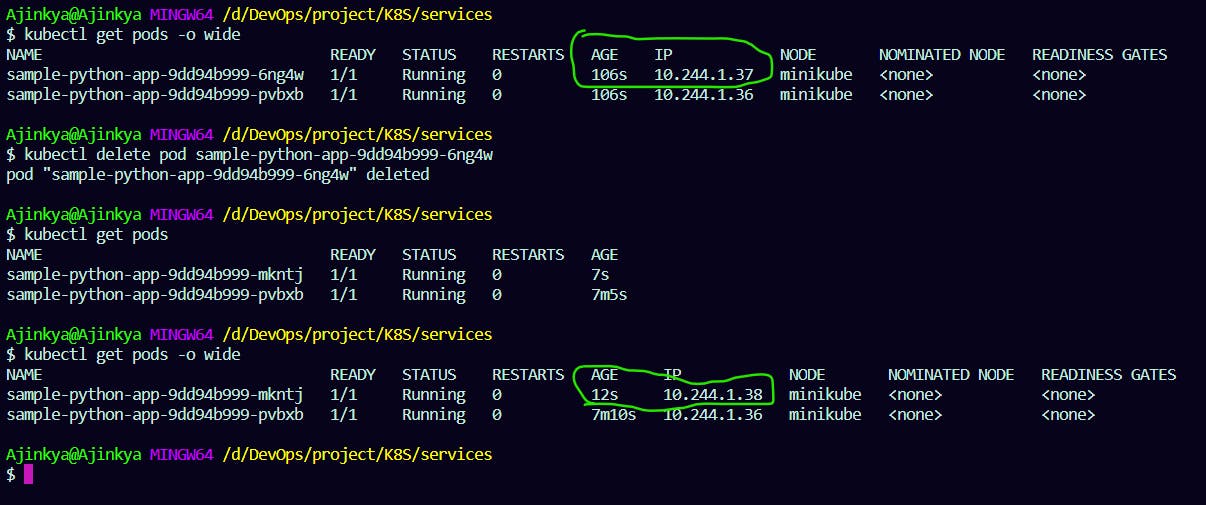

Auto healing services:

As we have created a deployment, we get auto healing: even if we delete a pod, another pod is created automatically, but this time it has a different IP address.

```shell
kubectl delete pod <pod_name>
```
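The auto healing behavior can be pictured as a loop that compares the desired number of pods with the actual number and fills the gap. The sketch below is a toy simulation only; `new_pod`, `reconcile`, and the IP scheme are hypothetical and do not reflect how the controller is really implemented.

```python
import itertools

# Hypothetical allocator: every new pod gets the next free IP suffix.
_ip_suffix = itertools.count(10)


def new_pod(app):
    """Create a pod record with a fresh name and IP."""
    n = next(_ip_suffix)
    return {"name": f"{app}-{n}", "ip": f"10.244.0.{n}"}


def reconcile(pods, desired, app="python-app"):
    """Add or drop pods until the list holds exactly `desired` entries."""
    pods = list(pods)
    while len(pods) < desired:
        pods.append(new_pod(app))
    return pods[:desired]


pods = reconcile([], desired=3)
old_ips = {pod["ip"] for pod in pods}

deleted = pods.pop(0)              # stands in for `kubectl delete pod`
pods = reconcile(pods, desired=3)  # the gap is filled with a brand-new pod
new_ips = {pod["ip"] for pod in pods}

print(sorted(old_ips))
print(sorted(new_ips))
```

The replica count is restored, but the replacement carries an IP that did not exist before, which is exactly why clients should not address pods directly.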

Label and Selectors:

So now, if we want to reach this newly created pod at its new IP address automatically, we need a discovery mechanism that can identify the pod and direct traffic to it. This is where K8s services come in: they identify pods using labels and selectors.

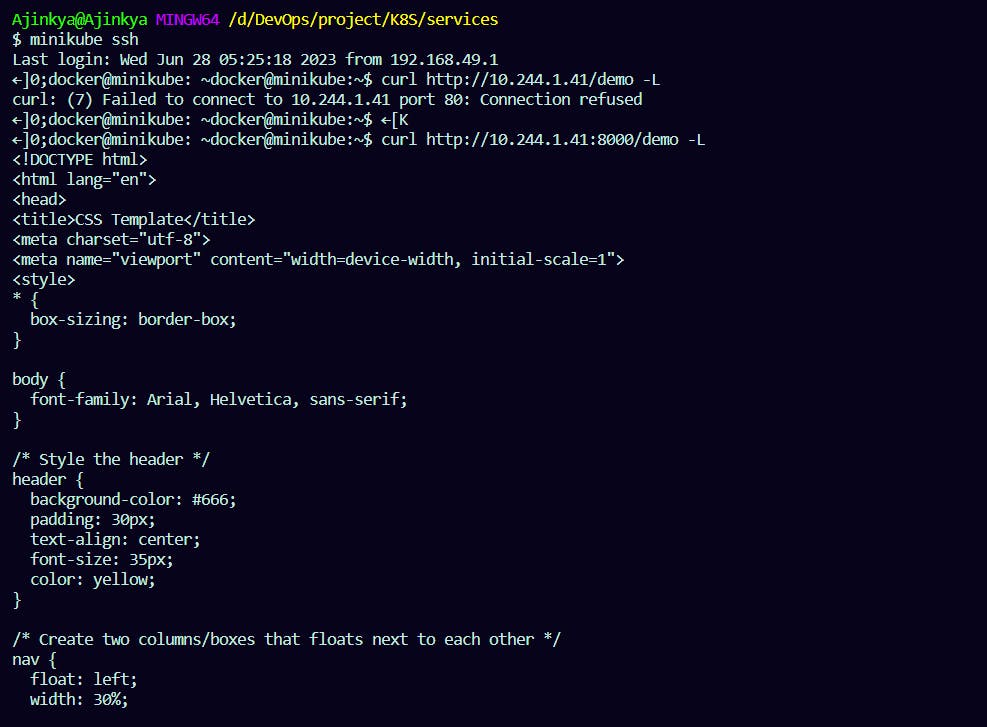

Accessing app from Cluster:

We can ssh into minikube to access the application:

```shell
minikube ssh
curl -L http://<pod_Ip>:8000/demo
```

Output:

But if we try to access our application from outside the cluster, the request does not reach the pod at all, because the pod IP is only routable inside the cluster. This can be solved using services.

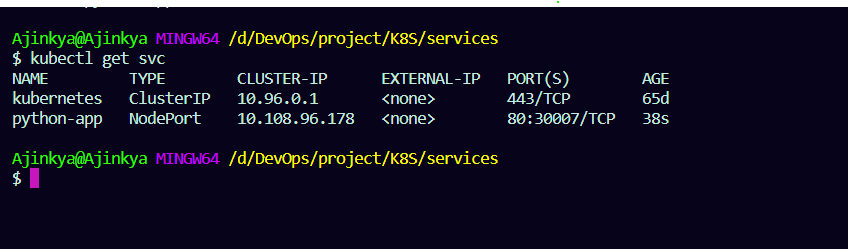

Creating Service:

NodePort Mode:

We are going to write a service.yaml file, which will contain the configuration for a service of type NodePort. The selector must match the label set on the pods in deployment.yaml.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: python-app
spec:
  type: NodePort
  selector:
    app: my-python-app
  ports:
    # By default and for convenience, the `targetPort` is set to the same value as the `port` field.
    - port: 80
      targetPort: 8000
      # Optional field
      # By default and for convenience, the Kubernetes control plane will allocate a port from a range (default: 30000-32767)
      nodePort: 30007
```

```shell
kubectl apply -f service.yaml
```
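The three port fields are easy to mix up, so here is a small sketch of how they relate, assuming the defaults described in the comments of the manifest. The helpers `resolve_ports` and `describe_path` are hypothetical names written for this post, not part of any Kubernetes client library.

```python
NODE_PORT_RANGE = range(30000, 32768)  # default allocation range 30000-32767


def resolve_ports(entry):
    """Fill in defaults for one `ports` entry and validate nodePort if given."""
    port = entry["port"]
    target_port = entry.get("targetPort", port)  # defaults to `port`
    node_port = entry.get("nodePort")            # None: the control plane picks one
    if node_port is not None and node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    return {"nodePort": node_port, "port": port, "targetPort": target_port}


def describe_path(entry, node_ip="<node-ip>"):
    """Describe the conceptual hops a request takes through a NodePort service."""
    ports = resolve_ports(entry)
    node_port = ports["nodePort"] if ports["nodePort"] is not None else "<auto-assigned>"
    return f"{node_ip}:{node_port} -> service:{ports['port']} -> pod:{ports['targetPort']}"


path = describe_path({"port": 80, "targetPort": 8000, "nodePort": 30007})
print(path)
```

With the values from service.yaml, a request to port 30007 on a node is handed to the service on port 80 and lands on port 8000 in the pod, where the Python application is listening.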

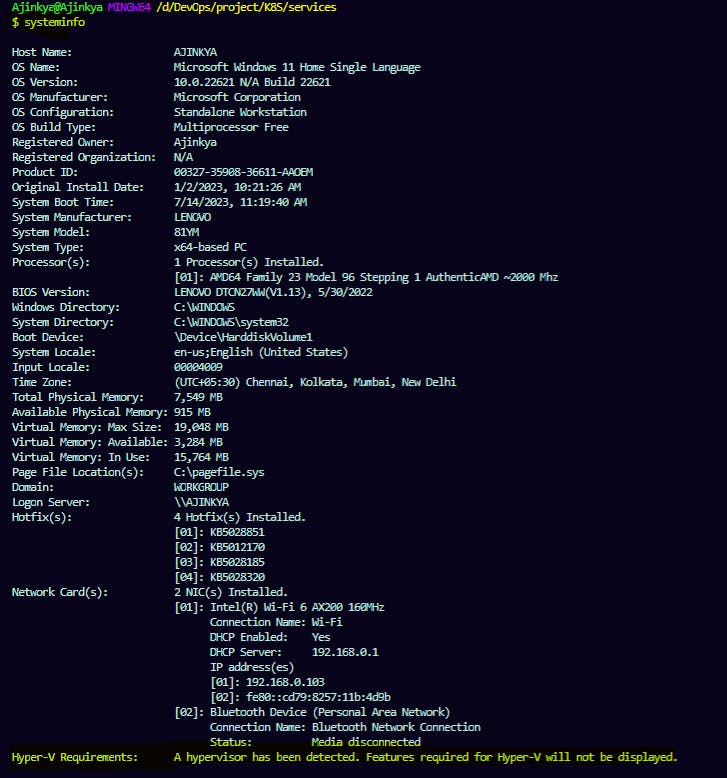

Note: If you are using the docker driver for minikube on your local machine, networking limitations can make the service unreachable from the host. In that case, try the hyperv driver for minikube on your local machine.

How to use Hyper-V for minikube on Windows 11:

Prerequisites:

Virtualization must be enabled in the BIOS.

Microsoft Hyper-V must be installed and enabled as a Windows feature.

Search for Control Panel. Select Control Panel. Select Programs and Features (View by: Large icons). Select Turn Windows features on or off.

Select both the Hyper-V Management Tools and Hyper-V Platform options. Click OK and follow the screen prompts. Reboot if necessary.

Ways to set up Hyper-V in Windows 11 (if it is not showing in Turn Windows features on or off): https://www.youtube.com/watch?v=V1feRcFlvXA

Use the following command to start minikube using the Hyper-V driver:

```shell
minikube start --driver=hyperv
```

LoadBalancer Mode:

LoadBalancer mode is supported on cloud platforms. To switch our service to LoadBalancer mode, all we have to do is replace NodePort in service.yaml with LoadBalancer. This will assign an external IP address that we can use to access the application.
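To make the one-word change concrete, the sketch below applies it to the service manifest expressed as a Python dictionary. The function `to_load_balancer` is a hypothetical helper for illustration; in practice you would simply edit service.yaml and re-apply it.

```python
import copy


def to_load_balancer(service):
    """Return a copy of a Service manifest with its type switched to LoadBalancer."""
    updated = copy.deepcopy(service)
    updated["spec"]["type"] = "LoadBalancer"  # note the exact spelling of the type
    return updated


# The NodePort service from service.yaml, expressed as a dictionary.
node_port_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "python-app"},
    "spec": {
        "type": "NodePort",
        "selector": {"app": "my-python-app"},
        "ports": [{"port": 80, "targetPort": 8000, "nodePort": 30007}],
    },
}

lb_service = to_load_balancer(node_port_service)
print(lb_service["spec"]["type"])
```

Everything else in the manifest, including the selector and the ports, stays the same; only `spec.type` changes.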

Conclusion:

In conclusion, Kubernetes services are crucial for seamless access to applications running in pods. They act as a middleman between users and the application, providing load balancing and routing of traffic to the appropriate pods. Services use labels and selectors to dynamically discover and route requests to pods, regardless of their IP addresses.

When a pod goes down and a new one is created, services ensure that the application remains accessible by directing traffic to the newly created pod, even if it has a different IP address. This solves the problem of users being unable to reach the application when pods change.

Additionally, services in Kubernetes offer different modes for exposing an application. The three common modes are:

ClusterIP: This mode allows access to the application only from within the cluster. It provides discovery and load-balancing features but requires users to be present within the cluster network to access the application.

NodePort: This mode enables access to an application from outside the cluster by exposing a port on every node in the cluster. Traffic sent to that port is then forwarded to the associated service. Users don't need direct cluster access but do need network access to a node in the cluster.

LoadBalancer: This mode provides external access to applications running within a cluster, similar to NodePort. However, it leverages cloud provider-specific load balancers to distribute traffic across multiple nodes. It assigns an external address, such as that of an Elastic Load Balancer (ELB) in AWS, for accessing the application from the external world.

By leveraging services and the appropriate access mode, Kubernetes ensures that applications remain reachable and scalable, even with dynamic pod lifecycles and changes in IP addresses. Services enable seamless access to applications running in pods, enhancing the overall reliability and user experience in Kubernetes environments.